A security researcher has been awarded a $107,500 bug bounty for identifying security flaws in Google Home smart speakers that could be exploited to install backdoors and turn the devices into wiretaps.

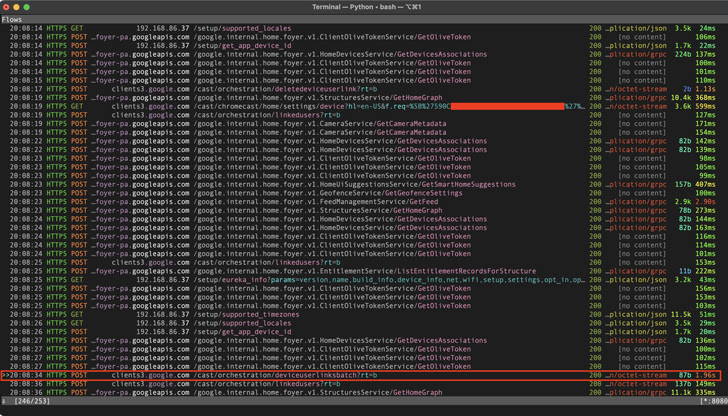

The researcher, Matt Kunze, detailed the flaws in a technical write-up published this week. According to Kunze, the flaws “allowed an attacker within wireless proximity to install a ‘backdoor’ account on the device, enabling them to send commands to it remotely over the internet, access its microphone feed, and make arbitrary HTTP requests within the victim’s LAN.”

By making such malicious requests, an attacker could not only expose the Wi-Fi password but also gain direct access to other devices connected to the same network. Following responsible disclosure on January 8, 2021, Google fixed the issues in April 2021.

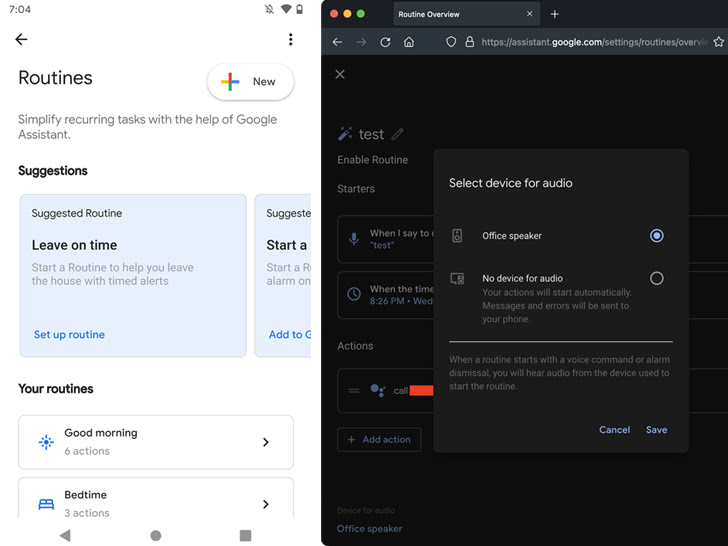

In a nutshell, the problem lies in the Google Home software architecture, which can be abused to add a rogue Google user account to a target’s home automation system.

In one attack chain described by the researcher, a threat actor who wants to eavesdrop on a victim persuades them to install a malicious Android app. When the app detects a Google Home device on the network, it sends covert HTTP requests that link the attacker’s account to the victim’s device.

Taking it a step further, the researcher found that a Wi-Fi deauthentication attack, which forces a Google Home device to disconnect from its network, could make the device enter “setup mode” and create its own open Wi-Fi network.

The threat actor can then connect to the device’s setup network and request details such as its device name, cloud device ID, and certificate, which they need to link their account to the device.

Whichever attack sequence is used, once the account is successfully linked, the adversary can abuse Google Home routines to set the device’s volume to zero and call a specified phone number at any time, spying on the victim through the microphone.

According to Kunze, the only thing the victim might notice is the device’s LEDs turning solid blue, which they would probably assume meant a firmware update was in progress. Nothing indicates that the microphone is open during a call, because the LEDs do not pulse as they usually do when the device is listening.

The attack can also be extended to make arbitrary HTTP requests within the victim’s network, read files from the linked device, or introduce malicious modifications that take effect after a reboot.

Among the fixes Google rolled out to address the problems are an invite-based mechanism for linking a Google account to the device through the API and a block on routines remotely initiating call commands.

This is not the first time researchers have devised ways to covertly eavesdrop on targets through voice-activated devices.

In November 2019, a team of academics unveiled “Light Commands,” a technique that exploits a flaw in MEMS microphones to let attackers use light to remotely inject invisible and inaudible commands into popular voice assistants such as Google Assistant, Amazon Alexa, Facebook Portal, and Apple Siri.